The Dance of Innovation: AI's Journey Through Springs and Winters

Many of us started exploring AI in late 2022, when OpenAI, a research and deployment company partnered with Microsoft, released ChatGPT, built on its GPT-3.5 model. It spread quickly because it offered powerful AI capabilities through a simple, free-to-use chat interface that anyone could open in a web browser, with no technical knowledge or special software required.

This chatbot, which could write essays, explain complex topics, and compose poetry, felt like a technological revelation, yet its journey began centuries ago. Picture two robots swinging a jump rope in an S-curved pattern. They mirror the story of AI: a tale of soaring heights and steep declines, of AI springs and winters.

From Mechanical Calculators to Neural Networks: The Early Foundations (1642-1956)

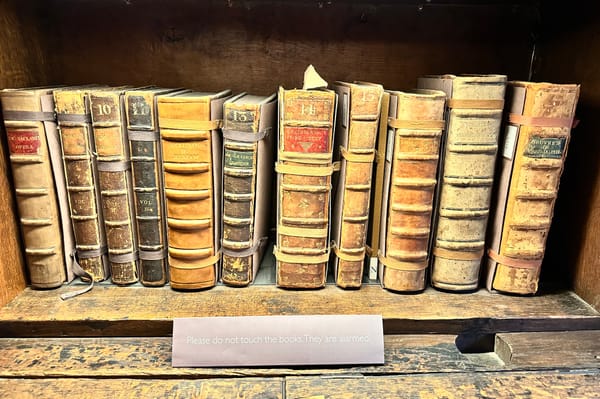

The story begins in 1642, when French mathematician Blaise Pascal invented a mechanical calculator. Nearly two centuries later, in 1837, English mathematician Charles Babbage designed a programmable machine, the Analytical Engine, in London, and Ada Lovelace later wrote what is often regarded as the first program for it. However, the inventors did not believe those machines could be truly intelligent.

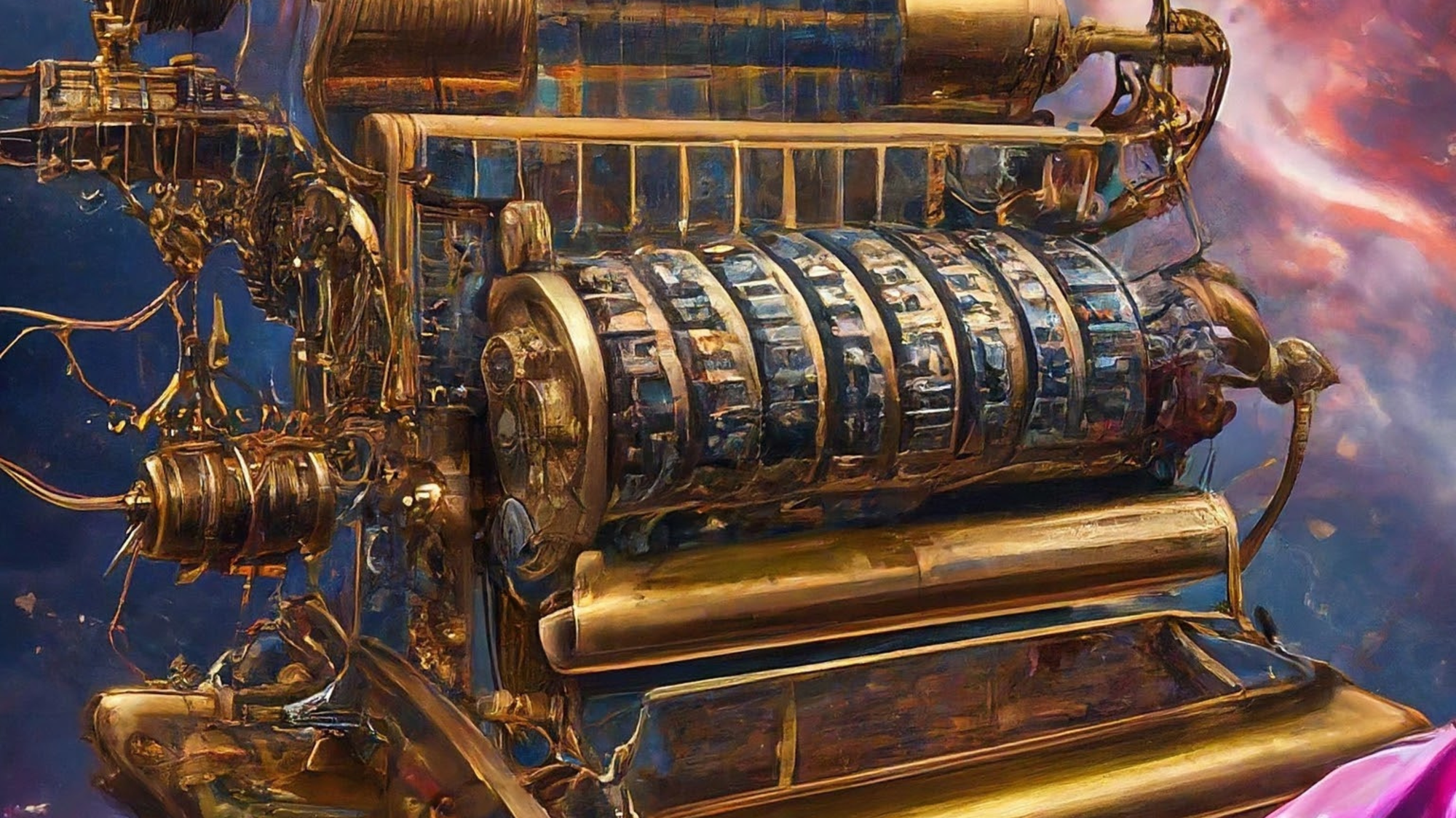

In 1936, British mathematician Alan Turing published "On Computable Numbers, with an Application to the Entscheidungsproblem" in the Proceedings of the London Mathematical Society, describing a universal machine. Later, he worked at Bletchley Park and developed the Bombe machine, which helped to break the Enigma code.

In 1943, two American scientists from the University of Chicago, Warren McCulloch and Walter Pitts, developed the idea further and made a crucial connection: the human brain's neural networks could serve as a model for computation. Seven years later, in 1950, Alan Turing, by then working at the University of Manchester, published his paper "Computing Machinery and Intelligence". In it he asked the provocative question: "Can machines think?" The Turing Test, proposed in this paper, became a touchstone for AI development, challenging researchers to create machines that could convincingly mimic human intelligence. In such a test, a human evaluator puts questions to both a machine and a human. If the evaluator cannot reliably distinguish the human's responses from the machine's, the machine is considered to have passed. Passing does not necessarily prove true intelligence, only the ability to simulate human-like responses convincingly, but the test nonetheless became a fundamental benchmark for measuring machine intelligence.

So 1950 is often regarded as the year AI was born; the term "artificial intelligence" itself, however, was introduced six years later, in 1956, at the Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI).

The Cyclical Nature: Spring (1956-1974)

The Dartmouth workshop in New Hampshire, funded by the Rockefeller Foundation, marked the beginning of the first AI Spring. The event brought together legendary figures: Marvin Minsky, who would later co-found the MIT AI laboratory; John McCarthy, the workshop's host; Nathaniel Rochester, designer of IBM's first commercial scientific computer; and Claude Shannon, the founder of information theory. Their shared vision of building machines that could simulate human intelligence set the course for decades of research.

The first spring bloomed quickly. John McCarthy created the LISP programming language in 1958 at MIT, giving AI researchers their first specialised tools. By 1966, ELIZA, developed by Joseph Weizenbaum at MIT's Artificial Intelligence Laboratory, was engaging in rudimentary conversations with humans, becoming the world's first chatbot. Government funding flowed freely, and the future seemed boundless.

The First Winter: Government Funding Freeze (1974-1980)

The first AI winter descended from 1974 to 1980 across both North America and Europe, as researchers' grand promises crashed against technical limitations. The breaking point came in 1973, when British mathematician James Lighthill published a report questioning AI researchers' optimistic outlook. He argued that computers could reach only the level of an experienced amateur in games like chess and that common-sense reasoning would remain beyond their capabilities. The British government responded by ending support for AI research in all but three universities, and the U.S. Congress, already critical of high spending on AI research, followed suit. The field had learned its first hard lesson about the dangers of overpromising.

Second AI Cycle: Spring's Hope (1980-1987) leads to Winter's Frost (1987-1993)

The 1980s brought a new spring, as Japan's ambitious AI investments sparked a global race. The United States, unwilling to be left behind, poured resources into AI development through the Defense Advanced Research Projects Agency (DARPA). DARPA's research aimed to advance AI for national security purposes while also contributing to broader technological progress with potential applications in various fields. This period saw the proliferation of expert systems, which were built on elaborate chains of if-then rules and still had fundamental limitations. The second winter arrived in 1987 and lasted until 1993, triggered by the familiar pattern of over-promising and under-delivering and by growing scepticism about AI's fundamental approach. Insufficient computing power, limited parallel processing, and limited data storage capacity hampered the field. Why did these constraints matter so much?

Data is often called the lifeblood of artificial intelligence, as essential as oil for machines or air for living beings. Without sufficient data, AI systems cannot learn, adapt, or function effectively. However, training AI on vast datasets requires enormous computational power, as these systems must process millions of examples to identify meaningful patterns. This computational requirement created a significant bottleneck in AI development in the late 1980s. First, computers of the time could largely execute only one operation at a time, rather than the millions of simultaneous calculations possible today. Second, they lacked the processing power to handle massive datasets and the storage capacity to retain the billions of data points necessary for AI to recognise patterns and make accurate predictions. Finally, even if the computing power had been available, there simply wasn't enough digitised data to train AI systems effectively. Together, these limitations stalled AI advancement until more powerful computing systems and larger datasets emerged.

The long-lasting Spring: Big Data and Deep Learning (1993-Present)

Every winter carries the seeds of spring. The 1990s saw two crucial developments: the rise of Enterprise Resource Planning (ERP) systems, which generated well-structured data, and the exponential growth of computing power following Moore's Law, the observation that the number of transistors on a chip doubles roughly every two years, popularly summarised as processors becoming twice as powerful and half as costly over the same period.
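To get a feel for how quickly a two-year doubling compounds, here is a minimal arithmetic sketch. It assumes a perfectly regular doubling every two years, which real hardware never followed exactly, and the function name `moores_law_factor` is made up for this illustration.

```python
def moores_law_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor after `years`, assuming one doubling per `doubling_period` years.

    This is an idealised model of Moore's Law, not a measurement of real chips.
    """
    if doubling_period <= 0:
        raise ValueError("doubling_period must be positive")
    return 2.0 ** (years / doubling_period)


if __name__ == "__main__":
    # Print the idealised growth factor over a few time spans.
    for years in (2, 10, 20, 30):
        print(f"{years:>2} years -> about {moores_law_factor(years):,.0f}x")
```

Under this idealised rule, twenty years of doubling already yields roughly a thousandfold increase, which is why the constraints of the late 1980s looked so different a decade or two later.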

While early AI research was largely fuelled by government funding, particularly in the US and UK, the landscape began to shift in the late 20th century. Several factors contributed to this transition. Firstly, initial enthusiasm waned as early AI systems failed to meet lofty expectations, leading to government funding cuts. Secondly, advancements in computing power, data availability, and algorithms created new opportunities for commercially viable AI applications. Businesses began to recognise the potential of AI to gain a competitive advantage. As a result, private investment in AI surged, with companies like Google, Microsoft, and Amazon pouring billions of dollars into AI research and development. This shift was further accelerated by the increasing availability of data, often held within the private sector, which is crucial for training AI models. Today, while government funding still plays a role in basic AI research, the majority of AI development is driven by businesses seeking to capitalise on its transformative potential.

This extraordinary acceleration in processing capability, together with the shift in funding and the emergence of the internet as a vast new source of data, led to dramatic results. In 1997, IBM's Deep Blue, developed in New York, made history by defeating the world chess champion, Garry Kasparov.

The following decades brought increasingly sophisticated AI applications. France's Aldebaran Robotics introduced the interactive Nao humanoid robot in 2006, while Apple revolutionised mobile interfaces with Siri in 2011. Another milestone came in 2016 when Google DeepMind's AlphaGo, developed in London, achieved what many thought impossible - defeating world champion Lee Sedol at Go, a game far more complex than chess.

Then came the moment we discussed earlier: 2022, when OpenAI's ChatGPT launched and captured global attention, making sophisticated AI accessible to the general public. This sparked a wave of innovation in generative AI, with companies like Anthropic releasing Claude, Google introducing Gemini, and Meta developing its Llama models. The Chinese tech sector also made significant strides, with Baidu releasing ERNIE and ByteDance developing its own models.

DeepSeek, a Chinese AI company, recently disrupted the AI landscape by developing and releasing its own model. The company claimed a development cost of about $6M, a striking contrast to the hundreds of millions of dollars typically associated with building advanced AI models. This cost reduction was attributed to its use of model distillation, in which a smaller model learns from a larger one. However, the claimed "X times cheaper" figures sparked intense debate in the AI community, with experts questioning the trade-offs between model performance, efficiency, and potential vulnerabilities. The company's decision to make its model open source and freely available for private deployment marked another step toward democratising AI development while also raising important discussions about responsible AI governance and deployment.
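The idea of a smaller model learning from a larger one can be made concrete with a small sketch. In the classic textbook formulation of distillation, the student is trained to match the teacher's "softened" output probabilities, and the mismatch is measured with a KL divergence. The code below illustrates only that general technique under simplifying assumptions (toy logits, no training loop); it is not DeepSeek's actual method, and the function names are hypothetical.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Log-probabilities of a temperature-scaled softmax (numerically stable)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_total = peak + math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - log_total for x in scaled]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions.

    A higher temperature flattens both distributions, so the student also
    learns from the teacher's relative preferences among unlikely answers.
    """
    teacher_lp = log_softmax(teacher_logits, temperature)
    student_lp = log_softmax(student_logits, temperature)
    return sum(math.exp(t) * (t - s) for t, s in zip(teacher_lp, student_lp))


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]       # toy outputs of a large model (assumed values)
    good_student = [3.8, 1.1, 0.4]  # close to the teacher
    poor_student = [0.5, 4.0, 1.0]  # disagrees with the teacher
    print("good student loss:", distillation_loss(teacher, good_student))
    print("poor student loss:", distillation_loss(teacher, poor_student))
```

During training, the student's parameters would be adjusted to push this loss toward zero, usually alongside an ordinary loss on the true labels. The loss is zero only when the two softened distributions coincide.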

Today, AI development has become more than just a technological race—it's a geopolitical chess match. Nations vie for AI supremacy, understanding that leadership in this field could determine economic and strategic power for decades to come. The dance continues, but now on a global stage, with higher stakes than ever.

AI now permeates our daily lives, from Alexa answering our questions and helping with simple tasks, to Tesla cars navigating our streets, to Netflix algorithms personalising our entertainment. Yet this blooming spring could face winters of its own. Many challenges loom: the growing environmental impact of training large AI models, as well as governance, ethical, and technological constraints. And while we are enjoying this spring, this article comes to an end. I hope that by tracing AI's journey from winter to spring, it has helped to clarify where we've been, where we are, and the challenges we must thoughtfully navigate as we move forward.

AI Tools Used in This Article

During my studies at Oxford, I work with a vast array of information sources: from academic articles, textbooks, and monographs to specialised curriculum literature, lecture materials, industry reports, analytical reviews, and research publications. In my writing process, I synthesise information from dozens of these diverse sources not only to develop comprehensive analyses, but also to explain complex concepts in a clear and engaging way.

In today's information-rich world, avoiding AI tools out of fear of 'artificiality' means deliberately limiting yourself. I actively integrate AI tools into my daily work, which boosts my efficiency and productivity:

- NotebookLM — for processing research materials and primary sources, helps highlight key points and create thorough reviews;

- Claude (AI from Anthropic) and/or Perplexity.ai — help me with article structuring and translation;

- Grammarly Premium — I use it for proofreading;

- Imagen — for creating AI-generated images;

- ElevenLabs — for creating audio versions in English using an artificial voice based on my (Vira Larina's) voice.